Logistic Regression + Diabetes Dataset


from sklearn.datasets import make_blobs

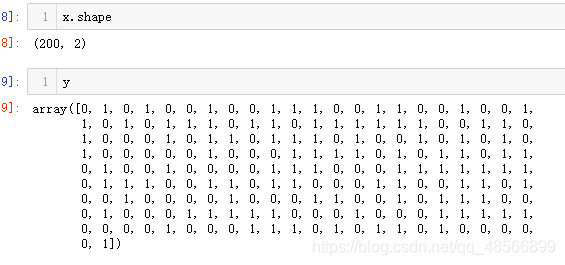

x,y=make_blobs(n_samples=200,n_features=2,centers=2,random_state=8)

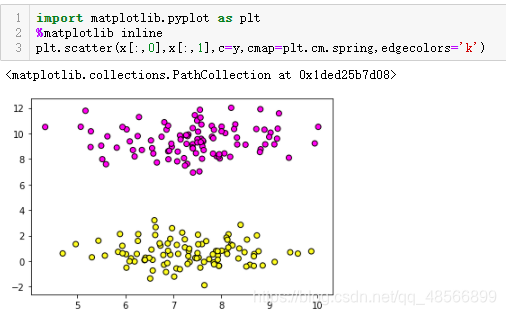

# Visualize the data

import matplotlib.pyplot as plt

%matplotlib inline

plt.scatter(x[:,0],x[:,1],c=y,cmap=plt.cm.spring,edgecolors='k')

Logistic regression with gradient descent

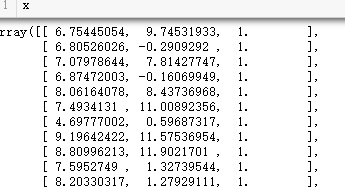
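For reference, the code in this section appears to implement the standard batch gradient-descent update for logistic regression. It is written here with the same names the code uses (theta, alpha, m); the symbol X for the training matrix with its appended column of ones is notation assumed for illustration.

```latex
h = \sigma(X\theta), \qquad
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
\theta \leftarrow \theta - \frac{\alpha}{m}\, X^{\top}(h - y)
```

Each iteration recomputes the predicted probabilities h from the current theta, then moves theta against the averaged gradient of the cross-entropy loss.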

# Add a column of ones (intercept term)

import numpy as np

x_ones=np.ones((x.shape[0],1))

x=np.hstack((x,x_ones))

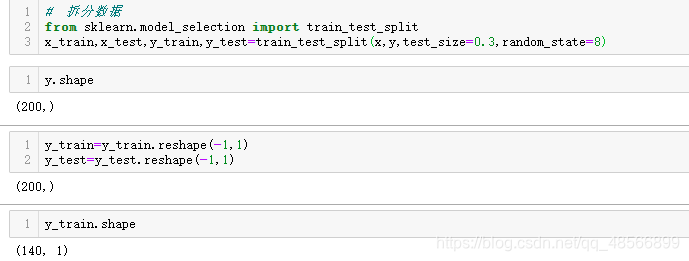

# Split the data into training and test sets

from sklearn.model_selection import train_test_split

x_train,x_test,y_train,y_test=train_test_split(x,y,test_size=0.3,random_state=8)

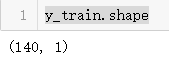

# Reshape the targets into column vectors

y_train=y_train.reshape(-1,1)

y_test=y_test.reshape(-1,1)

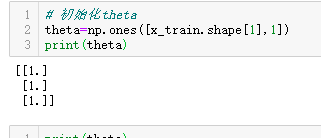

# Initialize theta

theta=np.ones([x_train.shape[1],1])

print(theta)

# Set the step size (learning rate)

alpha=0.001

# Define the sigmoid function

def sigmoid(z):
    s=1.0/(1+np.exp(-z))
    return s

h=sigmoid(np.dot(x_train,theta))

# m is the number of training samples (140 here, 70% of 200)

m=x_train.shape[0]

num_iters=10000

for i in range(num_iters):
    h=sigmoid(np.dot(x_train,theta))
    theta=theta-alpha*np.dot(x_train.T,(h-y_train))/m

print(theta)

[[ 0.65443683]

[-1.1828222 ]

[ 0.97980398]]

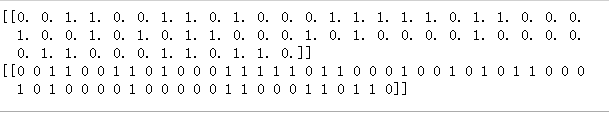
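The training loop above can also be wrapped in a function that records the loss at every step, which makes it easier to see whether the chosen alpha and num_iters are reasonable. This is a minimal sketch, not the author's code: the names `fit_logistic_gd` and `log_loss` are hypothetical, and the demo data is made up rather than taken from the blobs above.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, same form as in the walkthrough."""
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(X, y, theta):
    """Mean cross-entropy; eps keeps log() away from zero."""
    eps = 1e-12
    h = sigmoid(X @ theta)
    return float(-np.mean(y * np.log(h + eps) + (1 - y) * np.log(1 - h + eps)))

def fit_logistic_gd(X, y, alpha=0.001, num_iters=10000):
    """Batch gradient descent; X must already contain the column of ones."""
    m = X.shape[0]                      # number of training samples
    theta = np.ones((X.shape[1], 1))    # same all-ones start as the walkthrough
    losses = []
    for _ in range(num_iters):
        h = sigmoid(X @ theta)
        theta = theta - alpha * (X.T @ (h - y)) / m
        losses.append(log_loss(X, y, theta))
    return theta, losses

# Small synthetic check (made-up data, not the blobs from the post)
rng = np.random.default_rng(0)
X_demo = np.hstack([rng.normal(size=(100, 2)), np.ones((100, 1))])
y_demo = (X_demo[:, 0] - X_demo[:, 1] > 0).astype(float).reshape(-1, 1)
theta_demo, losses_demo = fit_logistic_gd(X_demo, y_demo, alpha=0.1, num_iters=500)
print(losses_demo[0], losses_demo[-1])
```

If the recorded loss is still falling quickly at the last iteration, more iterations or a larger step size may help; if it oscillates or grows, the step size is probably too large.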

# Predict

pred_y=sigmoid(np.dot(x_test,theta))

# Binarize the predictions

pred_y[pred_y>0.5]=1

pred_y[pred_y<=0.5]=0

print(pred_y.reshape(1,-1))

print(y_test.reshape(1,-1))

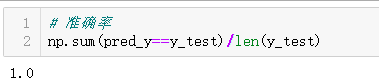

# Accuracy

np.sum(pred_y==y_test)/len(y_test)
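The thresholding and accuracy steps can also be written without overwriting the probability array in place. The sketch below uses hypothetical helper names (`predict_labels`, `accuracy`) and made-up numbers. It flattens both arrays before comparing, since comparing an (n,1) array with an (n,) array would broadcast to an (n,n) matrix and give a misleading score.

```python
import numpy as np

def predict_labels(prob, threshold=0.5):
    """Return 0/1 labels; values strictly above the threshold map to 1."""
    return (prob > threshold).astype(int)

def accuracy(y_true, y_pred):
    """Fraction of matching labels; both arrays are flattened first."""
    return float(np.mean(np.ravel(y_true) == np.ravel(y_pred)))

# Made-up probabilities and labels, only to show the calls
prob_demo = np.array([[0.9], [0.2], [0.5], [0.7]])
y_demo = np.array([[1], [0], [1], [1]])
labels_demo = predict_labels(prob_demo)
print(labels_demo.ravel(), accuracy(y_demo, labels_demo))
```

As in the walkthrough, a probability of exactly 0.5 is assigned to class 0.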

Hands-on: the diabetes dataset

data=np.loadtxt(r'pima-indians-diabetes.data.csv',delimiter=',',skiprows=1,dtype=float)

# Separate the feature variables from the class label

x=data[:,:-1]

y=data[:,-1]

# Standardize the features

mu=x.mean(axis=0)

std=x.std(axis=0)

x=(x-mu)/std
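The walkthrough standardizes the whole dataset before splitting it. One common variation, shown here only as a sketch, is to split first and compute the mean and standard deviation from the training rows alone, so the test rows do not influence the scaling. The function name `standardize_with_train_stats` is hypothetical and the arrays are random stand-ins for the real features; the column of ones would be added after this step.

```python
import numpy as np

def standardize_with_train_stats(x_train, x_test):
    """Scale both splits using mean/std computed on the training split only."""
    mu = x_train.mean(axis=0)
    std = x_train.std(axis=0)
    std = np.where(std == 0, 1.0, std)  # guard against constant columns
    return (x_train - mu) / std, (x_test - mu) / std

# Random stand-in data (not the diabetes features)
rng = np.random.default_rng(1)
raw_train = rng.normal(loc=5.0, scale=2.0, size=(50, 3))
raw_test = rng.normal(loc=5.0, scale=2.0, size=(20, 3))
z_train, z_test = standardize_with_train_stats(raw_train, raw_test)
print(z_train.mean(axis=0), z_train.std(axis=0))
```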

# Add a column of ones (intercept term)

x_ones=np.ones((x.shape[0],1))

x=np.hstack((x,x_ones))

# Split the data into training and test sets

x_train,x_test,y_train,y_test=train_test_split(x,y,test_size=0.3,random_state=16)

# Reshape the targets into column vectors

y_train=y_train.reshape(-1,1)

y_test=y_test.reshape(-1,1)

# Initialize theta

theta=np.ones([x_train.shape[1],1])

# print(theta)

# Set the step size (learning rate)

alpha=0.001

# Define the sigmoid function

def sigmoid(z):
    s=1.0/(1+np.exp(-z))
    return s

h=sigmoid(np.dot(x_train,theta))

# m is the number of training samples, x_train.shape[0]

m=x_train.shape[0]

num_iters=10000

for i in range(num_iters):
    h=sigmoid(np.dot(x_train,theta))
    theta=theta-alpha*np.dot(x_train.T,(h-y_train))/m

print(theta)

# Predict

pred_y=sigmoid(np.dot(x_test,theta))

# Binarize the predictions

pred_y[pred_y>0.5]=1

pred_y[pred_y<=0.5]=0

print(pred_y.reshape(1,-1))

print(y_test.reshape(1,-1))

# Accuracy

np.sum(pred_y==y_test)/len(y_test)
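An accuracy figure is easier to interpret next to a trivial baseline, especially when the two classes are not equally frequent. The sketch below computes the accuracy of always predicting the most frequent training label; the helper name `majority_baseline_accuracy` is hypothetical and the labels are made up for illustration.

```python
import numpy as np

def majority_baseline_accuracy(y_train, y_test):
    """Accuracy of always predicting the most frequent training label."""
    values, counts = np.unique(np.ravel(y_train), return_counts=True)
    majority = values[np.argmax(counts)]
    return float(np.mean(np.ravel(y_test) == majority))

# Made-up labels, only to show the call
y_train_demo = np.array([0, 0, 0, 1, 1]).reshape(-1, 1)
y_test_demo = np.array([0, 1, 0, 0]).reshape(-1, 1)
print(majority_baseline_accuracy(y_train_demo, y_test_demo))
```

If the gradient-descent model does not clearly beat this number on the test set, the step size or the iteration count is worth revisiting.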
