Training with PyTorch: Semantic Segmentation on a Crack Detection Dataset, with Complete Code Examples


We will train with the PyTorch framework and provide complete code examples.

Dataset Preparation

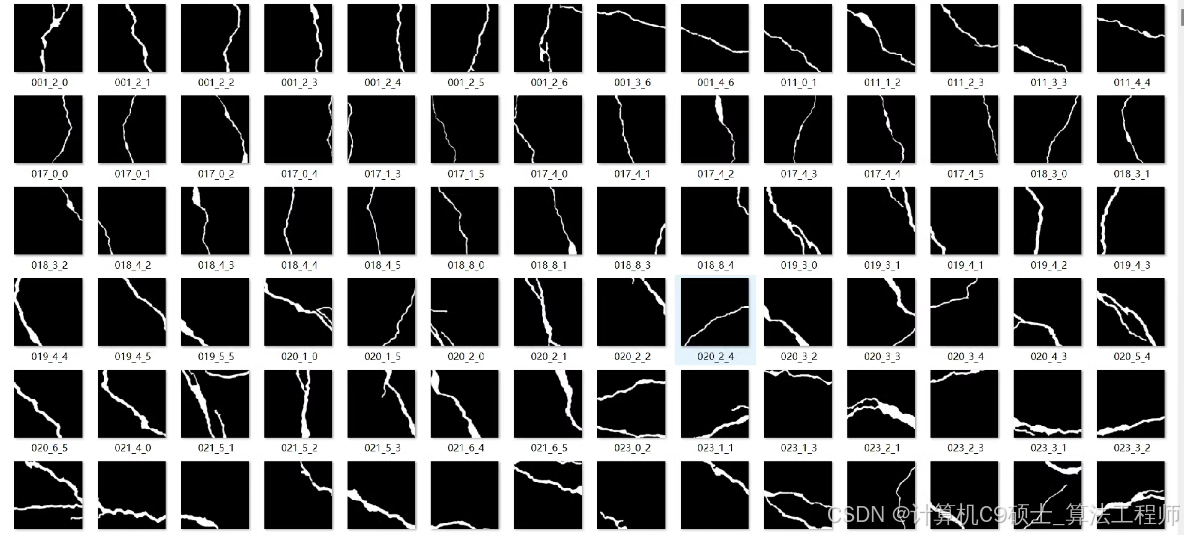

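Assume your dataset contains 2932 crack detection images and their corresponding label images, with each original image paired with exactly one label mask. All images need to be resized to a uniform 448x448 pixels.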
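Resizing needs extra care for the label masks. Bilinear interpolation (the default in torchvision's `transforms.Resize`) blends 0 and 255 at crack edges into intermediate values, while nearest-neighbour resizing only copies existing label values; in torchvision it is selected with `interpolation=transforms.InterpolationMode.NEAREST`. The numpy sketch below illustrates the idea with a hand-written nearest-neighbour resize and a threshold that maps a 0/255 mask to 0/1 labels. The function names, the 0/255 mask encoding, and the threshold of 127 are assumptions, not details given by the dataset.

```python
import numpy as np


def resize_mask_nearest(mask, size=(448, 448)):
    """Nearest-neighbour resize of a 2-D label mask; introduces no new label values."""
    out_h, out_w = size
    in_h, in_w = mask.shape
    # Output pixel (i, j) copies source pixel (i * in_h // out_h, j * in_w // out_w)
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return mask[rows[:, None], cols[None, :]]


def binarize_mask(mask, threshold=127):
    """Map a 0/255 grayscale mask to float32 labels: crack = 1.0, background = 0.0."""
    return (mask > threshold).astype(np.float32)


# Usage: a 600x800 mask with a one-pixel-wide horizontal "crack" at row 300
mask = np.zeros((600, 800), dtype=np.uint8)
mask[300, :] = 255
resized = binarize_mask(resize_mask_nearest(mask))
print(resized.shape, np.unique(resized))
```

One trade-off to keep in mind: nearest-neighbour downsampling can skip whole rows or columns, so one-pixel-wide cracks may be thinned or broken. Checking a few resized masks by eye is worthwhile.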

Directory Structure

Your dataset directory might look like this:

```
crack_dataset/
├── images/
│   ├── image1.jpg
│   ├── image2.jpg
│   └── ...
├── masks/
│   ├── mask1.png
│   ├── mask2.png
│   └── ...
└── README.txt    # dataset description
```

Here, images/ holds the original images and masks/ holds the label masks.
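The data loader below pairs images with masks by sorting both folders, which only works if the two listings sort into the same order and no file is missing. Assuming the `imageN.jpg` / `maskN.png` naming shown in the tree, a more robust option is to pair files by their numeric index and report any image without a mask. This is a hypothetical helper (the function names are illustrative) using only the standard library.

```python
import re


def numeric_key(filename):
    """Return the last run of digits in a filename, e.g. 'mask12.png' -> 12."""
    digits = re.findall(r"\d+", filename)
    if not digits:
        raise ValueError(f"no numeric index in {filename!r}")
    return int(digits[-1])


def pair_images_and_masks(image_names, mask_names):
    """Pair image and mask files that share the same numeric index."""
    masks_by_index = {numeric_key(m): m for m in mask_names}
    pairs, missing = [], []
    # Numeric sort, so image2 comes before image10 (plain sorted() would not)
    for name in sorted(image_names, key=numeric_key):
        idx = numeric_key(name)
        if idx in masks_by_index:
            pairs.append((name, masks_by_index[idx]))
        else:
            missing.append(name)
    return pairs, missing


images = ["image10.jpg", "image2.jpg", "image1.jpg", "image3.jpg"]
masks = ["mask1.png", "mask2.png", "mask10.png"]
pairs, missing = pair_images_and_masks(images, masks)
print(pairs)
print(missing)
```

In a real run, the two name lists would come from `os.listdir` on images/ and masks/; any entries in `missing` point to annotation gaps worth fixing before training.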

Data Loader

We need to define a data loader that reads the images and their label masks:

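```python
import os

import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset, DataLoader
from torchvision import transforms


class CrackDetectionDataset(Dataset):
    """Pairs each image in images_dir with the mask at the same sorted position in masks_dir."""

    def __init__(self, images_dir, masks_dir, transform=None, mask_transform=None):
        self.images_dir = images_dir
        self.masks_dir = masks_dir
        self.transform = transform
        # Masks get their own transform so they can be resized with nearest-neighbour interpolation
        self.mask_transform = mask_transform if mask_transform is not None else transforms.ToTensor()
        # Sorting both listings assumes image and mask filenames sort into the same order
        self.image_filenames = [os.path.join(images_dir, f) for f in sorted(os.listdir(images_dir))]
        self.mask_filenames = [os.path.join(masks_dir, f) for f in sorted(os.listdir(masks_dir))]
        if len(self.image_filenames) != len(self.mask_filenames):
            raise ValueError("images/ and masks/ contain a different number of files")

    def __len__(self):
        return len(self.image_filenames)

    def __getitem__(self, idx):
        if torch.is_tensor(idx):
            idx = idx.tolist()
        image = Image.open(self.image_filenames[idx]).convert("RGB")
        mask = Image.open(self.mask_filenames[idx]).convert("L")
        if self.transform:
            image = self.transform(image)
        # ToTensor scales to [0, 1]; pixels above 0.5 (> 127 on a 0-255 scale) count as crack
        mask = (self.mask_transform(mask) > 0.5).float()  # shape (1, H, W), values 0.0/1.0
        return image, mask


# Image transform: resize to 448x448 and scale pixel values to [0, 1]
transform = transforms.Compose([
    transforms.Resize((448, 448)),
    transforms.ToTensor(),
])

# Mask transform: nearest-neighbour resizing keeps the labels discrete
mask_transform = transforms.Compose([
    transforms.Resize((448, 448), interpolation=transforms.InterpolationMode.NEAREST),
    transforms.ToTensor(),
])

# Create the dataset
dataset = CrackDetectionDataset(
    images_dir="path/to/crack_dataset/images/",
    masks_dir="path/to/crack_dataset/masks/",
    transform=transform,
    mask_transform=mask_transform,
)

# Create the data loader
dataloader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=4)
```

Model Definition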
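U-Net, the architecture used in this section, halves the spatial resolution at each of its four 2x2 max-pooling stages and doubles it again with stride-2 transposed convolutions before concatenating the matching encoder features. For those `torch.cat` skip connections to line up, the size entering every pooling stage must be even, i.e. the input side must be divisible by 2^4 = 16; 448 = 16 × 28 satisfies this. The small helper below (hypothetical, plain Python) traces the spatial size and channel count per level, assuming `init_features=32` and square inputs.

```python
def unet_shapes(input_size=448, init_features=32, depth=4):
    """Return (spatial size, channels) for each encoder level, then the bottleneck."""
    shapes = []
    size, channels = input_size, init_features
    for level in range(depth):
        shapes.append((size, channels))
        # MaxPool2d floors odd sizes, so the upsampled map would be one pixel short
        if size % 2 != 0:
            raise ValueError(f"size {size} at level {level} is odd; skip connections would not align")
        size //= 2      # 2x2 max pooling
        channels *= 2   # next block doubles the feature count
    shapes.append((size, channels))  # bottleneck
    return shapes


print(unet_shapes())  # 448x448 input with 32 initial features
```

For 448x448 inputs this gives 448 → 224 → 112 → 56 → 28 at the bottleneck, with 32, 64, 128, 256 and 512 channels, matching `encoder1` through `bottleneck` in the model.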

We can use U-Net as the base model for semantic segmentation:

```python
import torch
import torch.nn as nn
from collections import OrderedDict


class UNet(nn.Module):
    def __init__(self, in_channels=3, out_channels=1, init_features=32):
        super(UNet, self).__init__()
        features = init_features
        # Encoder: four conv blocks, each followed by 2x2 max pooling
        self.encoder1 = UNet._block(in_channels, features, name="enc1")
        self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.encoder2 = UNet._block(features, features * 2, name="enc2")
        self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.encoder3 = UNet._block(features * 2, features * 4, name="enc3")
        self.pool3 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.encoder4 = UNet._block(features * 4, features * 8, name="enc4")
        self.pool4 = nn.MaxPool2d(kernel_size=2, stride=2)

        self.bottleneck = UNet._block(features * 8, features * 16, name="bottleneck")

        # Decoder: stride-2 transposed convs upsample; each block sees upsampled + skip channels
        self.upconv4 = nn.ConvTranspose2d(features * 16, features * 8, kernel_size=2, stride=2)
        self.decoder4 = UNet._block((features * 8) * 2, features * 8, name="dec4")
        self.upconv3 = nn.ConvTranspose2d(features * 8, features * 4, kernel_size=2, stride=2)
        self.decoder3 = UNet._block((features * 4) * 2, features * 4, name="dec3")
        self.upconv2 = nn.ConvTranspose2d(features * 4, features * 2, kernel_size=2, stride=2)
        self.decoder2 = UNet._block((features * 2) * 2, features * 2, name="dec2")
        self.upconv1 = nn.ConvTranspose2d(features * 2, features, kernel_size=2, stride=2)
        self.decoder1 = UNet._block(features * 2, features, name="dec1")

        # 1x1 conv maps the features to one output channel (crack vs. background)
        self.conv = nn.Conv2d(in_channels=features, out_channels=out_channels, kernel_size=1)

    def forward(self, x):
        enc1 = self.encoder1(x)
        enc2 = self.encoder2(self.pool1(enc1))
        enc3 = self.encoder3(self.pool2(enc2))
        enc4 = self.encoder4(self.pool3(enc3))
        bottleneck = self.bottleneck(self.pool4(enc4))

        dec4 = self.upconv4(bottleneck)
        dec4 = torch.cat((dec4, enc4), dim=1)
        dec4 = self.decoder4(dec4)
        dec3 = self.upconv3(dec4)
        dec3 = torch.cat((dec3, enc3), dim=1)
        dec3 = self.decoder3(dec3)
        dec2 = self.upconv2(dec3)
        dec2 = torch.cat((dec2, enc2), dim=1)
        dec2 = self.decoder2(dec2)
        dec1 = self.upconv1(dec2)
        dec1 = torch.cat((dec1, enc1), dim=1)
        dec1 = self.decoder1(dec1)
        # Sigmoid turns the logits into per-pixel crack probabilities in [0, 1]
        return torch.sigmoid(self.conv(dec1))

    @staticmethod
    def _block(in_channels, features, name):
        # Two (3x3 conv -> BatchNorm -> ReLU) layers; padding=1 keeps the spatial size
        return nn.Sequential(
            OrderedDict(
                [
                    (
                        name + "conv1",
                        nn.Conv2d(
                            in_channels=in_channels,
                            out_channels=features,
                            kernel_size=3,
                            padding=1,
                            bias=False,
                        ),
                    ),
                    (name + "norm1", nn.BatchNorm2d(num_features=features)),
                    (name + "relu1", nn.ReLU(inplace=True)),
                    (
                        name + "conv2",
                        nn.Conv2d(
                            in_channels=features,
                            out_channels=features,
                            kernel_size=3,
                            padding=1,
                            bias=False,
                        ),
                    ),
                    (name + "norm2", nn.BatchNorm2d(num_features=features)),
                    (name + "relu2", nn.ReLU(inplace=True)),
                ]
            )
        )


# Instantiate the model on the GPU
model = UNet().cuda()
```

Model Training
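Because the U-Net above ends in `torch.sigmoid`, its outputs are already probabilities, and that determines which loss fits. PyTorch documents `nn.BCEWithLogitsLoss` as combining a sigmoid with `nn.BCELoss`, so it expects raw logits; feeding it probabilities applies the sigmoid twice, which confines the effective prediction to roughly [0.5, 0.731] and keeps the loss from approaching zero even for perfect predictions. The numpy sketch below reproduces the per-element math without PyTorch (the helper names are illustrative). The two consistent setups are `nn.BCELoss` on the sigmoid output, or removing the final sigmoid, keeping `nn.BCEWithLogitsLoss`, and applying `torch.sigmoid` at inference before thresholding.

```python
import numpy as np


def sigmoid(x):
    """Logistic function, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-x))


def bce(prob, target, eps=1e-12):
    """Element-wise binary cross-entropy on probabilities (the math behind nn.BCELoss)."""
    prob = np.clip(prob, eps, 1.0 - eps)
    return -(target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob))


# Two confident, correct predictions: background (logit -10) and crack (logit +10)
logits = np.array([-10.0, 10.0])
targets = np.array([0.0, 1.0])

probs = sigmoid(logits)                  # what a model ending in torch.sigmoid returns
matched = bce(probs, targets)            # probabilities fed to a probability loss
double = bce(sigmoid(probs), targets)    # probabilities fed to a logits loss: sigmoid twice
print(matched.round(4), double.round(4))
```

With matched inputs the loss is near zero, while the double-sigmoid version stays around 0.69 for background pixels and 0.31 for crack pixels no matter how confident the model is.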

Next, define the training loop:

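```python
import torch.optim as optim

# Loss and optimizer. The U-Net above already applies torch.sigmoid, so its outputs are
# probabilities and nn.BCELoss is the matching loss (BCEWithLogitsLoss expects raw logits).
criterion = nn.BCELoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

num_epochs = 100
best_loss = float("inf")

for epoch in range(num_epochs):
    model.train()
    running_loss = 0.0
    for inputs, labels in dataloader:
        inputs, labels = inputs.cuda(), labels.cuda()
        optimizer.zero_grad()
        outputs = model(inputs)                    # (B, 1, 448, 448) probabilities
        loss = criterion(outputs, labels.float())  # masks are already (B, 1, 448, 448)
        loss.backward()
        optimizer.step()
        running_loss += loss.item() * inputs.size(0)

    epoch_loss = running_loss / len(dataloader.dataset)
    print(f"Epoch {epoch + 1}/{num_epochs}, Loss: {epoch_loss:.4f}")

    # Keep the weights with the lowest training loss so far; the prediction script loads best.pth
    if epoch_loss < best_loss:
        best_loss = epoch_loss
        torch.save(model.state_dict(), "best.pth")
```

Model Evaluation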
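Cracks usually cover only a small fraction of each image, so pixel accuracy can look high even when a model predicts no crack at all. Intersection over union (IoU) and the Dice coefficient, computed on the crack class, are common complements. The numpy sketch below is a hypothetical helper operating on binary masks; in the evaluation loop it could be fed `predicted` and the binarized labels after converting them with `.cpu().numpy()`.

```python
import numpy as np


def segmentation_metrics(pred, target, eps=1e-7):
    """Pixel accuracy, IoU and Dice of the crack class (1) for binary masks."""
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    tp = np.logical_and(pred, target).sum()    # crack predicted as crack
    fp = np.logical_and(pred, ~target).sum()   # background predicted as crack
    fn = np.logical_and(~pred, target).sum()   # crack missed
    tn = np.logical_and(~pred, ~target).sum()  # background predicted as background
    accuracy = (tp + tn) / pred.size
    iou = tp / (tp + fp + fn + eps)
    dice = 2 * tp / (2 * tp + fp + fn + eps)
    return float(accuracy), float(iou), float(dice)


target = np.zeros((100, 100), dtype=np.uint8)
target[50, :] = 1  # a thin crack covering 1% of the pixels
print(segmentation_metrics(np.zeros_like(target), target))  # predicts "no crack" everywhere
print(segmentation_metrics(target, target))                 # perfect prediction
```

The first call reports 99% pixel accuracy with an IoU and Dice of zero, which is why accuracy alone says little about crack detection quality.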

After training, evaluate the model's performance:

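```python
# Pixel accuracy. Note: this loop reuses the training dataloader, so it measures fit on the
# training data; build a loader from held-out images to estimate generalization.
model.eval()
with torch.no_grad():
    correct = 0
    total = 0
    for inputs, labels in dataloader:
        inputs, labels = inputs.cuda(), labels.cuda()
        outputs = model(inputs)
        predicted = (outputs > 0.5).float()
        targets = (labels > 0.5).float()  # binary ground truth, same shape as predicted
        total += targets.numel()          # count pixels, not images
        correct += (predicted == targets).sum().item()

print(f"Pixel accuracy of the network on the dataset: {100 * correct / total:.2f}%")
```

Model Prediction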
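Prediction turns the model's probability map into a binary mask by thresholding at 0.5. For saving, note that `cv2.imwrite` writes 8-bit data for formats such as JPEG and PNG, so a float mask in [0, 1] should be scaled to 0/255 and cast to `uint8` first. The numpy sketch below shows that conversion plus a simple colour overlay for visual inspection; the function names, the red overlay colour and `alpha=0.5` are illustrative assumptions. The overlay is built in RGB order, so if it is saved with OpenCV it should first be converted with `cv2.cvtColor(overlay, cv2.COLOR_RGB2BGR)`, since OpenCV assumes BGR.

```python
import numpy as np


def prob_to_mask_image(prob_map, threshold=0.5):
    """Threshold a [0, 1] probability map into a uint8 image: crack = 255, background = 0."""
    prob_map = np.asarray(prob_map, dtype=np.float32)
    return np.where(prob_map > threshold, 255, 0).astype(np.uint8)


def overlay_mask(image_rgb, mask_u8, color=(255, 0, 0), alpha=0.5):
    """Blend `color` over the crack pixels of a uint8 RGB image of shape (H, W, 3)."""
    image = image_rgb.astype(np.float32)
    colour = np.array(color, dtype=np.float32)
    crack = mask_u8 > 0
    image[crack] = (1 - alpha) * image[crack] + alpha * colour
    return image.round().astype(np.uint8)


probs = np.array([[0.1, 0.6], [0.5, 0.9]], dtype=np.float32)
mask_img = prob_to_mask_image(probs)
overlay = overlay_mask(np.zeros((2, 2, 3), dtype=np.uint8), mask_img)
print(mask_img.tolist())
```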

Below is an example Python script that uses the trained model for prediction:

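```python
import os

import cv2
import numpy as np
import torch
from PIL import Image
from torchvision import transforms

from model import UNet  # assumes the UNet class above is saved in model.py


def predict_cracks(model, image_path, save_dir="results"):
    # Apply the same preprocessing as in training: resize to 448x448, scale to [0, 1]
    transform = transforms.Compose([
        transforms.Resize((448, 448)),
        transforms.ToTensor(),
    ])
    img = transform(Image.open(image_path).convert("RGB"))

    # Add a batch dimension and move the input to the model's device
    device = next(model.parameters()).device
    img = img.unsqueeze(0).to(device)

    # Inference without gradient tracking; the model outputs probabilities
    with torch.no_grad():
        output = model(img)

    # Post-process: threshold at 0.5 -> (448, 448) float32 mask of 0.0/1.0
    pred_mask = (output > 0.5).float().squeeze().cpu().numpy()

    # Visualize: OpenCV expects BGR, so convert the RGB input; show image and mask side by side
    img_np = np.ascontiguousarray(img.squeeze(0).permute(1, 2, 0).cpu().numpy())
    img_bgr = cv2.cvtColor(img_np, cv2.COLOR_RGB2BGR)
    mask_bgr = cv2.cvtColor(pred_mask, cv2.COLOR_GRAY2BGR)
    result = np.concatenate((img_bgr, mask_bgr), axis=1)

    # Show the result (requires a display)
    cv2.imshow("Result", result)
    cv2.waitKey(0)
    cv2.destroyAllWindows()

    # Save as an 8-bit image; cv2.imwrite expects uint8 data for JPEG/PNG
    os.makedirs(save_dir, exist_ok=True)
    cv2.imwrite(os.path.join(save_dir, os.path.basename(image_path)), (result * 255).astype(np.uint8))


if __name__ == "__main__":
    model_path = "path/to/your/best.pth"   # path to the trained weights
    image_path = "path/to/your/image.jpg"  # path to the test image

    # Load the model
    model = UNet().cuda()
    model.load_state_dict(torch.load(model_path, map_location="cuda"))
    model.eval()

    # Run prediction
    predict_cracks(model, image_path)
```

Complete Training and Prediction Workflow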

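- Install the deep learning framework (the prediction script also needs OpenCV): `pip install torch torchvision opencv-python`

- Create the data loader: `data_loader.py`

- Define the model: `model.py`

- Run the training script: `python train.py`

- Run the prediction script: `python predict.py`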
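The evaluation step above reuses the training dataloader, so it measures how well the model fits data it has already seen. When writing `data_loader.py`, one option is to hold out a fixed, seeded subset of the 2932 images for validation. The sketch below uses only the standard library; the 10% fraction and seed 42 are arbitrary assumptions. The resulting index lists can be wrapped with `torch.utils.data.Subset(dataset, train_idx)` and `Subset(dataset, val_idx)`, each with its own `DataLoader`; `torch.utils.data.random_split` is a built-in alternative.

```python
import random


def split_indices(n_samples, val_fraction=0.1, seed=42):
    """Shuffle indices 0..n_samples-1 reproducibly and split them into (train, val) lists."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # private RNG: same split on every run
    n_val = int(round(n_samples * val_fraction))
    return indices[n_val:], indices[:n_val]


train_idx, val_idx = split_indices(2932, val_fraction=0.1)
print(len(train_idx), len(val_idx))
```

Using a private `random.Random(seed)` keeps the split stable across runs without touching the global random state used elsewhere in training.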

Notes

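- Dataset quality: make sure the data is of good quality, including image clarity and annotation accuracy.

- Model choice: depending on your needs, you can switch to a more complex model or fine-tune an existing one.

- Hyperparameter tuning: adjust hyperparameters such as the learning rate and batch size to your situation.

- Performance monitoring: track the loss and accuracy during training to confirm that the model converges.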
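One common way to act on the convergence check in the last note, not part of the original scripts, is early stopping: stop training once the monitored (ideally validation) loss has not improved for a set number of epochs. The class below is a hypothetical, framework-independent sketch; its name and the default `patience`/`min_delta` values are assumptions. Inside the training loop, `if stopper.step(epoch_loss): break` would end training early.

```python
class EarlyStopping:
    """Signal when a monitored loss has stopped improving.

    patience:  epochs to wait after the last improvement before stopping
    min_delta: minimum decrease in loss that counts as an improvement
    """

    def __init__(self, patience=10, min_delta=1e-4):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.epochs_without_improvement = 0

    def step(self, loss):
        """Record one epoch's loss; return True if training should stop."""
        if loss < self.best - self.min_delta:
            self.best = loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


stopper = EarlyStopping(patience=3)
losses = [0.50, 0.40, 0.35, 0.36, 0.35, 0.37]  # made-up per-epoch losses for illustration
stopped_at = None
for epoch, loss in enumerate(losses, start=1):
    if stopper.step(loss):
        stopped_at = epoch
        break
print(stopped_at, stopper.best)
```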

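Following the steps above, you can use PyTorch to train a semantic segmentation model on a crack detection dataset and then use the trained model for prediction.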
